The IEEE Computational Intelligence Magazine (CIM) publishes peer-reviewed articles that present emerging novel discoveries, important insights, or tutorial surveys in all areas of computational intelligence design and applications, in keeping with the Field of Interest of the IEEE Computational Intelligence Society (IEEE/CIS). Additionally, CIM serves as a medium of communication between the governing body of IEEE/CIS and its membership. Authors are encouraged to submit papers on applications-oriented developments, successful industrial implementations, design tools, technology reviews, computational intelligence education, and applied research.

Contributions should contain novel and previously unpublished material. The novelty will usually lie in original concepts, results, techniques, observations, hardware/software implementations, or applications, but may also lie in syntheses of, or new insights into, previously reported research. Surveys and expository submissions are also welcome. In general, material that has been previously copyrighted, published, or accepted for publication will not be considered; however, prior preliminary or abbreviated publication of the material shall not preclude publication in this journal.

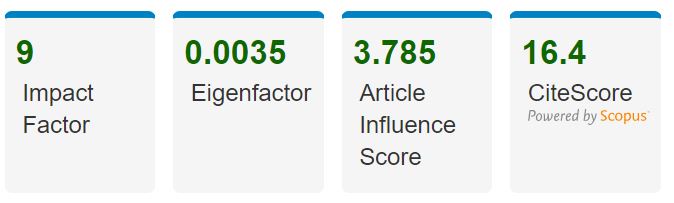

Impact Score

The values displayed for the journal bibliometrics fields in IEEE Xplore are based on the 2022 Journal Citation Reports from Clarivate, released in June 2023. The values displayed for CiteScore metrics are from the Scopus 2022 report, released in June 2023.

Journal Citation Metrics

Journal citation metrics such as Impact Factor, Eigenfactor Score™, and Article Influence Score™ are available where applicable. Each year, Journal Citation Reports© (JCR) from Clarivate examines the influence and impact of scholarly research journals. JCR reveals the relationship between citing and cited journals, offering a systematic, objective means to evaluate the world's leading journals. Find out more about IEEE Journal Rankings.

Special Issues

Advancing Computational Intelligence in Autonomous Learning and Optimization Systems [Call for Papers]

Guest Editors: Yaqing Hou, Liang Feng, and Yi Mei

Submission Deadline: July 1, 2024

Featured Papers

How Good is Neural Combinatorial Optimization? A Systematic Evaluation on the Traveling Salesman Problem

Shengcai Liu, Yu Zhang, Ke Tang, and Xin Yao

IEEE Computational Intelligence Magazine (Volume: 18, Issue: 3, August 2023)

Abstract: Traditional solvers for tackling combinatorial optimization (CO) problems are usually designed by human experts. Recently, there has been a surge of interest in utilizing deep learning, especially deep reinforcement learning, to automatically learn effective solvers for CO. The resultant new paradigm is termed neural combinatorial optimization (NCO). However, the advantages and disadvantages of NCO relative to other approaches have not been empirically or theoretically well studied. This work presents a comprehensive comparative study of NCO solvers and alternative solvers. Specifically, taking the traveling salesman problem as the testbed problem, the performance of the solvers is assessed in five aspects, i.e., effectiveness, efficiency, stability, scalability, and generalization ability. Our results show that the solvers learned by NCO approaches, in general, still fall short of traditional solvers in nearly all these aspects. A potential benefit of NCO solvers would be their superior time and energy efficiency for small-size problem instances when sufficient training instances are available. Hopefully, this work would help with a better understanding of the strengths and weaknesses of NCO and provide a comprehensive evaluation protocol for further benchmarking NCO approaches in comparison to other approaches.

Index Terms: Training, Deep learning, Systematics, Protocols, Scalability, Benchmark testing, Traveling salesman problems

IEEE Xplore Link: https://ieeexplore.ieee.org/document/10188470

Jack and Masters of all Trades: One-Pass Learning Sets of Model Sets From Large Pre-Trained Models

Han Xiang Choong, Yew-Soon Ong, Abhishek Gupta, Caishun Chen, and Ray Lim

IEEE Computational Intelligence Magazine (Volume: 18, Issue: 3, August 2023)

Abstract: For deep learning, size is power. Massive neural nets trained on broad data for a spectrum of tasks are at the forefront of artificial intelligence. These large pre-trained models or “Jacks of All Trades” (JATs), when fine-tuned for downstream tasks, are gaining importance in driving deep learning advancements. However, environments with tight resource constraints, changing objectives and intentions, or varied task requirements, could limit the real-world utility of a singular JAT. Hence, in tandem with current trends towards building increasingly large JATs, this paper conducts an initial exploration into concepts underlying the creation of a diverse set of compact machine learning model sets. Composed of many smaller and specialized models, the Set of Sets is formulated to simultaneously fulfil many task settings and environmental conditions. A means to arrive at such a set tractably in one pass of a neuroevolutionary multitasking algorithm is presented for the first time, bringing us closer to models that are collectively “Masters of All Trades”.

Index Terms: Deep learning, Machine learning algorithms, Computational modeling, Neural networks, Multitasking, Market research, Training data

IEEE Xplore Link: https://ieeexplore.ieee.org/document/10188456